Generative AI has emerged as the tech trendsetter of 2023. As we look at our favorite technologies, it's hard to miss how integrally woven generative AI features have become in our favorite applications. From interacting directly with Large Language Models (LLMs) like ChatGPT to marveling at the capabilities of image generation models such as DALL·E and Stable Diffusion, the influence of generative AI is undeniable. However, the potential of this powerful technology is not limited to consumer tech applications. Those working in the world of machine learning and artificial intelligence have likely already noticed the rise of synthetic data generation platforms, which leverage generative AI to produce training data for AI systems. These platforms, and the companies building them, fit into the MLOps ecosystem — the specialized domain focusing on the tools and processes that product and engineering teams rely on to build, refine, and deploy machine learning models effectively.

MLOps is the space that we at Manot have been committed to for the last few years. Our platform empowers all members of machine learning teams to gain insights in their model’s performance, eliminate model blind spots, and curate novel data on unseen scenarios that can be used to improve the model’s accuracy and patch its weaknesses. Today, we’re thrilled to announce that we’ve taken the expertise we have built in data curation and model performance evaluation, and merged it with the latest in generative AI technology to build Manot’s GenAI module, a product that allows you to uncover new scenarios the model was not exposed to during training, and generate targeted, high-quality data that improves your computer vision model’s performance.

Understanding Where Generative AI Fits into the Machine Learning Lifecycle

Data curation lies at the heart of the machine learning lifecycle. The essence of a model's understanding of the world is shaped by its training data. Therefore, it's imperative to ensure this data accurately mirrors the model's operational environments to achieve the best accuracy. However, continuously sourcing training data data from the real world is both costly and time-consuming, leading to long and tenuous feedback loops. Companies must go out, collect data, test the model’s performance on it, and continue iterating until the model’s accuracy has improved. These challenges have led many companies to turn to synthetic data as a solution to enhance their data curation efforts. Defined simply, synthetic data is algorithmically generated, rather than being derived from real-world sources. Thanks to advancements in generative AI technology, generating such data has become more accessible, resulting in a surge of platforms dedicated to generating training data for machine learning models. Studies are continuing to show strong results for models trained on synthetic data, and top consulting firms such as Gartner are estimating that by 2030 “synthetic data will completely overshadow real data in AI models.”

It’s not hard to imagine why companies are rushing to incorporate synthetic data into their machine learning lifecycle. The benefits of synthetic data are numerous. Above all, it offers the capability to swiftly produce vast amounts of data, considerably cutting down the time and resources spent on data collection and preprocessing. Furthermore, synthetic data grants developers greater command over data diversity and distribution by quickly allowing AI teams to generate scenarios to test the model’s performance on. This empowers them to meticulously simulate various conditions and scenarios.

But perhaps the most important benefit that synthetic data generation offers is the ability to have data that is more representative of the real world. As previously mentioned, a model's training data molds its understanding of the world, but the real world is dynamic and constantly evolving, rife with exceptions to the norm. A street's appearance shifts continually—weather, vehicles, lighting, and pedestrians all change. Additionally, no two streets are identical. It's one thing to train an autonomous vehicle for a familiar urban street with abundant data. However, "most of the time" isn't adequate when discussing a three-ton car traveling at 60 miles per hour. To ensure operational safety, we must be certain the autonomous vehicle operates correctly almost every single instance. Synthetic data's flexibility allows for the creation of these critical scenarios in large quantities, ensuring the model is thoroughly trained to handle them. This generated data can be used to fine-tune models for specific scenarios, enhancing their robustness and accuracy, and substantially reducing the chances of real-world failures.

However, to truly maximize the benefits of this readily accessible data, a crucial question needs addressing: while we have the capability to generate data, how do we determine what data to generate?

Getting the Most out of Synthetic Data Generative using Manot

At Manot, we have been intently focused on this question long before generative AI became a common term in everyone's vocabulary. Our insight management platform specializes in data curation and model enhancement, aiming to offer insights into the model's performance and pinpoint scenarios where it may not function optimally. When such scenarios are identified, Manot curates data samples that the model is likely to struggle with, commonly referred to as edge cases. By adopting this approach, we offer targeted data curation, emphasizing only the data crucial to your model's accuracy rather than overwhelming users with vast amounts of data that may not necessarily have a positive impact on the model’s performance. Today, we are thrilled to announce that we are bringing this approach to synthetic data generation. We are merging the capabilities of powerful image generation models with Manot’s advanced error prediction and diagnosis system to generate targeted synthetic data for computer vision models.

Building on this vision, we've crafted our solution to amplify the robust potential of generative AI into a holistic approach. Merely producing synthetic data doesn't maximize the technology's full potential. Without clear insight into your model's vulnerabilities, generating such data can replicate inefficiencies inherent in the conventional machine learning lifecycle. Often, vast amounts of non-specific data are produced without pinpointing samples that significantly enhance model accuracy. At Manot, we employ our algorithms to initially discern which data samples will challenge your model, based on a preliminary analysis of the model's performance on a test set. Upon identifying these vulnerabilities, Manot generates edge cases, offering users actionable insight into the model's weak points and aiding in its refinement. By integrating Manot's error prediction into their workflow, AI teams can greatly streamline the model improvement and redeployment cycle, placing a clear emphasis on understanding model behavior from the outset.

Our experience in helping teams shorten their feedback loops associated with model improvement has shown that these tools must be inclusive of all members of the product and engineering teams, which is why our generative AI features are accessible through our UI portal. In addition, our SDK allows for more modularity that engineering teams may require when working with systems such as ours, and includes the option to integrate their preferred image generation model into Manot’s workflow.

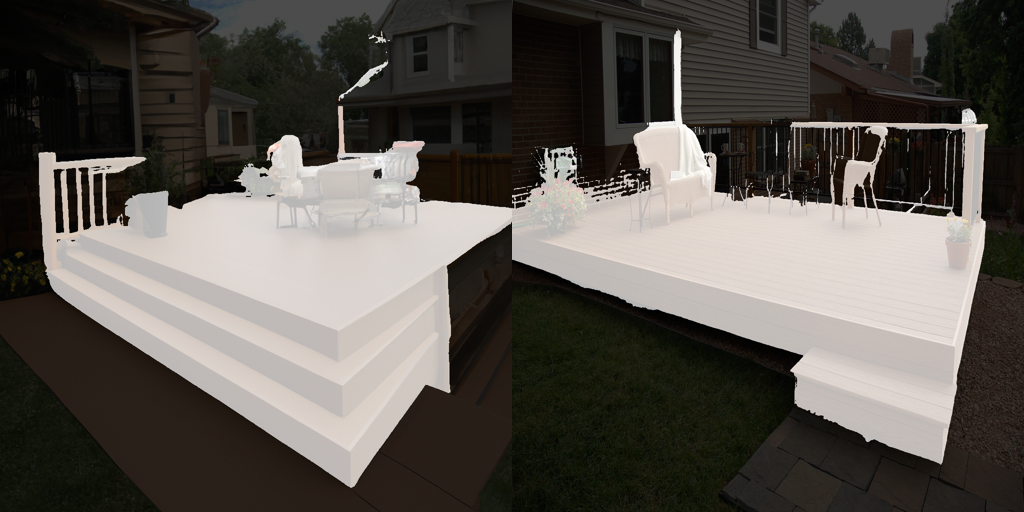

Here are some examples of Manot’s synthetic data generation capabilities applied to a background removal task. As you can see in the example above the predictive model fails to capture the foreground holistically on the original image (on the right), the generated, synthetic variation (on the left) shows the persistence of the problem. Hence Manot is able to provide as much realistic synthetic data as you need to improve your model in scenarios where it will fail.

We can notice the same thing in another example. Where, instead of capturing the furniture on the deck as a foreground the model mistakenly marks the deck itself as a foreground too, which happens in both the original image (on the right) and the synthetic variation (on the left).

Join our Beta

We’re excited to get Manot’s latest generative AI features for targeted synthetic data generation into product manager’s and engineer’s hands as quickly as possible. If you are a product manager or engineer working hard to make computer vision models work in the real world, and think that this feature can help your company with data curation for your models, sign up here for our beta. Or if you have any questions or would like to chat about what we’re working on, drop us a line!